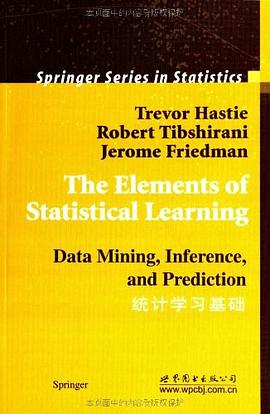

The Elements of Statistical Learning (統計學習基礎)

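- Machine learning

- Statistical learning

- Statistics

- Data mining

- Mathematics

- Probability and statistics

- Statistical

- Data analysis

- Probability theory

- Linear algebra

- Regression analysis

- Classification algorithms

- Model evaluation

- Python applications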

Description

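Rapid advances in computing and information technology have produced vast amounts of data in fields such as medicine, biology, finance, and marketing. Making sense of these data is a challenge that has driven the development of new tools in statistics and extended into new areas such as data mining, machine learning, and bioinformatics. Many of these tools share a common foundation but are often expressed in different terminology. The Elements of Statistical Learning (2nd Edition, English) presents the important concepts in these areas. Although the approach is statistical, the emphasis is on concepts rather than mathematics. Many examples are accompanied by color figures. The book's coverage is broad, ranging from supervised learning (prediction) to unsupervised learning. Topics include neural networks, support vector machines, classification trees, and boosting, making it the most comprehensive treatment among books of its kind.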

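The Elements of Statistical Learning (2nd Edition, English) can serve as a textbook for undergraduate and graduate students in related programs, and is well worth reading for statisticians and for anyone in science or industry interested in data mining.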

About the Author

Author: (Germany) Trevor Hastie

Table of Contents

1 Introduction

2 Overview of Supervised Learning

2.1 Introduction

2.2 Variable Types and Terminology

2.3 Two Simple Approaches to Prediction: Least Squares and Nearest Neighbors

2.3.1 Linear Models and Least Squares

2.3.2 Nearest-Neighbor Methods

2.3.3 From Least Squares to Nearest Neighbors

2.4 Statistical Decision Theory

2.5 Local Methods in High Dimensions

2.6 Statistical Models, Supervised Learning and Function Approximation

2.6.1 A Statistical Model for the Joint Distribution Pr(X,Y)

2.6.2 Supervised Learning

2.6.3 Function Approximation

2.7 Structured Regression Models

2.7.1 Difficulty of the Problem

2.8 Classes of Restricted Estimators

2.8.1 Roughness Penalty and Bayesian Methods

2.8.2 Kernel Methods and Local Regression

2.8.3 Basis Functions and Dictionary Methods

2.9 Model Selection and the Bias-Variance Tradeoff

Bibliographic Notes

Exercises

3 Linear Methods for Regression

3.1 Introduction

3.2 Linear Regression Models and Least Squares

3.2.1 Example: Prostate Cancer

3.2.2 The Gauss-Markov Theorem

3.3 Multiple Regression from Simple Univariate Regression

3.3.1 Multiple Outputs

3.4 Subset Selection and Coefficient Shrinkage

3.4.1 Subset Selection

3.4.2 Prostate Cancer Data Example (Continued)

3.4.3 Shrinkage Methods

3.4.4 Methods Using Derived Input Directions

3.4.5 Discussion: A Comparison of the Selection and Shrinkage Methods

3.4.6 Multiple Outcome Shrinkage and Selection

3.5 Computational Considerations

Bibliographic Notes

Exercises

4 Linear Methods for Classification

4.1 Introduction

4.2 Linear Regression of an Indicator Matrix

4.3 Linear Discriminant Analysis

4.3.1 Regularized Discriminant Analysis

4.3.2 Computations for LDA

4.3.3 Reduced-Rank Linear Discriminant Analysis

4.4 Logistic Regression

4.4.1 Fitting Logistic Regression Models

4.4.2 Example: South African Heart Disease

4.4.3 Quadratic Approximations and Inference

4.4.4 Logistic Regression or LDA?

4.5 Separating Hyperplanes

4.5.1 Rosenblatt's Perceptron Learning Algorithm

4.5.2 Optimal Separating Hyperplanes

Bibliographic Notes

Exercises

5 Basis Expansions and Regularization

5.1 Introduction

5.2 Piecewise Polynomials and Splines

5.2.1 Natural Cubic Splines

5.2.2 Example: South African Heart Disease (Continued)

5.2.3 Example: Phoneme Recognition

5.3 Filtering and Feature Extraction

5.4 Smoothing Splines

5.4.1 Degrees of Freedom and Smoother Matrices

5.5 Automatic Selection of the Smoothing Parameters

5.5.1 Fixing the Degrees of Freedom

5.5.2 The Bias-Variance Tradeoff

5.6 Nonparametric Logistic Regression

5.7 Multidimensional Splines

5.8 Regularization and Reproducing Kernel Hilbert Spaces

5.8.1 Spaces of Functions Generated by Kernels

5.8.2 Examples of RKHS

5.9 Wavelet Smoothing

5.9.1 Wavelet Bases and the Wavelet Transform

5.9.2 Adaptive Wavelet Filtering

Bibliographic Notes

Exercises

Appendix: Computational Considerations for Splines

Appendix: B-splines

Appendix: Computations for Smoothing Splines

6 Kernel Methods

6.1 One-Dimensional Kernel Smoothers

6.1.1 Local Linear Regression

6.1.2 Local Polynomial Regression

6.2 Selecting the Width of the Kernel

6.3 Local Regression in $\mathbb{R}^p$

6.4 Structured Local Regression Models in $\mathbb{R}^p$

6.4.1 Structured Kernels

6.4.2 Structured Regression Functions

6.5 Local Likelihood and Other Models

6.6 Kernel Density Estimation and Classification

6.6.1 Kernel Density Estimation

6.6.2 Kernel Density Classification

6.6.3 The Naive Bayes Classifier

6.7 Radial Basis Functions and Kernels

6.8 Mixture Models for Density Estimation and Classification

6.9 Computational Considerations

Bibliographic Notes

Exercises

7 Model Assessment and Selection

7.1 Introduction

7.2 Bias, Variance and Model Complexity

7.3 The Bias-Variance Decomposition

7.3.1 Example: Bias-Variance Tradeoff

7.4 Optimism of the Training Error Rate

7.5 Estimates of In-Sample Prediction Error

7.6 The Effective Number of Parameters

7.7 The Bayesian Approach and BIC

7.8 Minimum Description Length

7.9 Vapnik-Chervonenkis Dimension

7.9.1 Example (Continued)

7.10 Cross-Validation

7.11 Bootstrap Methods

7.11.1 Example (Continued)

Bibliographic Notes

Exercises

8 Model Inference and Averaging

8.1 Introduction

8.2 The Bootstrap and Maximum Likelihood Methods

8.2.1 A Smoothing Example

8.2.2 Maximum Likelihood Inference

8.2.3 Bootstrap versus Maximum Likelihood

8.3 Bayesian Methods

8.4 Relationship Between the Bootstrap and Bayesian Inference

8.5 The EM Algorithm

8.5.1 Two-Component Mixture Model

8.5.2 The EM Algorithm in General

8.5.3 EM as a Maximization-Maximization Procedure

8.6 MCMC for Sampling from the Posterior

8.7 Bagging

8.7.1 Example: Trees with Simulated Data

8.8 Model Averaging and Stacking

8.9 Stochastic Search: Bumping

Bibliographic Notes

Exercises

9 Additive Models, Trees, and Related Methods

9.1 Generalized Additive Models

9.1.1 Fitting Additive Models

9.1.2 Example: Additive Logistic Regression

9.1.3 Summary

9.2 Tree-Based Methods

10 Boosting and Additive Trees

11 Neural Networks

12 Support Vector Machines and Flexible Discriminants

13 Prototype Methods and Nearest-Neighbors

14 Unsupervised Learning

References

Author Index

Index

Reader Reviews

http://www-stat.stanford.edu/~hastie/local.ftp/Springer/ESLII_print3.pdf

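Very difficult, and not "elementary" at all; it reads like an encyclopedia. I would not recommend it to beginners. Many chapters lack detail and stay at the overview level, so understanding even a few chapters is an achievement. Each chapter could really be expanded into a book of its own or support many papers. Understanding all of it is extremely hard, but it works well as a reference to consult for whichever part you need.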

The methodology used in the book is fancy and attractive, yet in terms of rigorous proofs the book sometimes skips steps and is difficult to follow. Slightly sophisticated for undergraduate students, but in general a very nice book.

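For beginners, this book falls far short of PRML. I strongly recommend that beginners read PRML first and then come to this book. To take the simplest example, Chapter 2 of this book, Overview of Supervised Learning, is far weaker than PRML's introduction. A reader with no background will find this book's overview nearly incomprehensible, whereas PRML uses an example to...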

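Someone has already posted the official free download link for the original English edition in the reviews. The translator of the Chinese edition quite possibly lacked basic mathematical knowledge and produced the work with Google Translate. The hyperplane's "normal equation" (法线方程) was rendered as "standard equation on the plane" (平面上的标准方程); yet anyone with a little high-school knowledge of higher-dimensional geometry knows that the normal is the direction orthogonal to the hyperplane, and can never...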

User Comments

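The most classic introductory textbook on statistical learning. Many machine learning books pay little attention to the principles behind the models, but these are indispensable for doing machine learning well. Also, lecture videos given by Hastie and his colleagues a few years ago can be found on YouTube.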

Will be a classic


© 2025 book.quotespace.org (小美書屋). All Rights Reserved.